Video Description

One element that sets actuaries apart from related professions is our strict adherence to professionalism standards. Unlike those in the broad category of "data scientists," we actuaries may sometimes perceive our precepts and actuarial standards as constraints that limit the full potential of new technologies.

Drawing on recent releases by the American Academy of Actuaries, the Society of Actuaries, and the Casualty Actuarial Society, I (Mary Pat Campbell) explore how actuaries can uphold these high standards while venturing into new technological areas, preserving our reputation for dependable results and high-quality insights. As cautionary examples, I also examine instances where a lack of checks and controls in technological applications led to failures.

This was given as a pre-recorded segment at the 2024 Actuarial Tech Summit, hosted by Full Stack Actuarial: https://fullstackactuarial.com/

Feel free to skip the first couple of minutes, which served as a buffer while attendees arrived.

Slides

AI Whoopsies

The famous Woodrow Wilson In-Law, Hunter DeButts

ChatGPT, non-Expert Witness

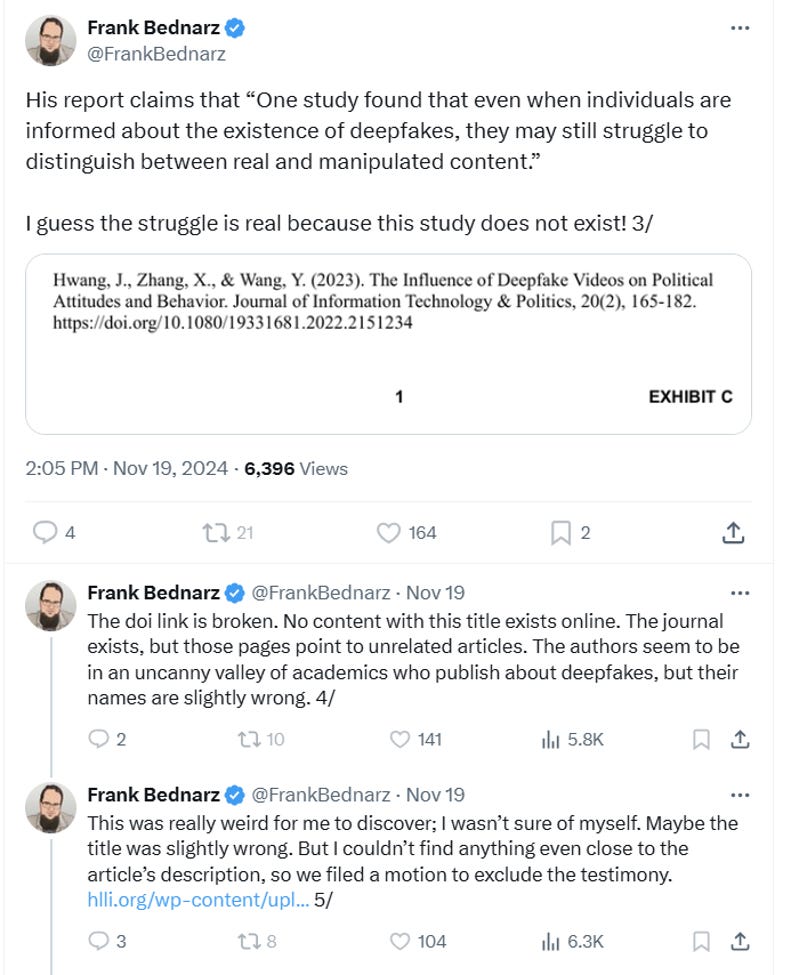

19 Nov 2024, Volokh Conspiracy: Apparent AI Hallucinations in AI Misinformation Expert's Court Filing Supporting Anti-AI-Misinformation Law

Minnesota recently enacted a law aimed at restricting misleading AI deepfakes aimed at influencing elections; the law is now being challenged on First Amendment grounds in Kohls v. Ellison. To support the law, the government defendants introduced an expert declaration, written by a scholar of AI and misinformation, who is the Faculty Director of the Stanford Internet Observatory. Here is ¶ 21 of the declaration:

[T]he difficulty in disbelieving deepfakes stems from the sophisticated technology used to create seamless and lifelike reproductions of a person's appearance and voice. One study found that even when individuals are informed about the existence of deepfakes, they may still struggle to distinguish between real and manipulated content. This challenge is exacerbated on social media platforms, where deepfakes can spread rapidly before they are identified and removed (Hwang et al., 2023).

The attached bibliography provides this cite:

Hwang, J., Zhang, X., & Wang, Y. (2023). The Influence of Deepfake Videos on Political Attitudes and Behavior. Journal of Information Technology & Politics, 20(2), 165-182. https://doi.org/10.1080/19331681.2022.2151234

But the plaintiffs' memorandum in support of their motion to exclude the expert declaration alleges—apparently correctly—that this study "does not exist":

No article by the title exists. The publication exists, but the cited pages belong to unrelated articles. Likely, the study was a "hallucination" generated by an AI large language model like ChatGPT….

27 Nov 2024, Volokh Conspiracy: Acknowledgment of AI Hallucinations in AI Misinformation Expert's Declaration in AI Misinformation Case

From the declaration filed today by the expert witness in Kohls v. Ellison (D. Minn.), a case challenging the Minnesota restriction on AI deepfakes in election campaigns:

[1.] I am writing to acknowledge three citation errors in my expert declaration, which was filed in this case on November 1, 2024 (ECF No. 23). I wrote and reviewed the substance of the declaration, and I stand firmly behind each of the claims made in it, all of which are supported by the most recent scholarly research in the field and reflect my opinion as an expert regarding the impact of AI technology on misinformation and its societal effects. Attached as Exhibit 1 is a redline version of the corrected expert declaration, and attached as Exhibit 2 is a redline version of the corrected list of academic and other references cited in the expert declaration.

[2.] The first citation error appears in paragraph 19 and cites to a nonexistent 2023 article by De keersmaecker & Roets. The correct citation for the proposition is to Hancock & Bailenson (2021), cited in paragraph 17(iv). The second citation error appears in paragraph 21, a citation to a nonexistent 2023 article by Hwang et al., and is identified by the Plaintiffs in their motion to exclude my declaration. The correct citation for that proposition is to Vaccari & Chadwick (2020), which appears in paragraph 20. The third citation error appears in Exhibit C to the declaration (ECF No. 23-1, at 39): the citation to Goldstein et al. lists the first author correctly, but the remaining authors are incorrect. The correct authors are Goldstein, J., Sastry, G., Musser, M., DiResta, R., Gentzel, M., and Sedova, K. I discovered the errors in paragraph 19 and Exhibit C when Plaintiffs brought the error in paragraph 21 to the Court's attention, and I re-reviewed my declaration.

[3.] I apologize to the Court for these three citation errors, and I explain how they came to be below. I did not intend to mislead the Court or counsel. I express my sincere regret for any confusion this may have caused. That said, I stand firmly behind all of the substantive points in the declaration. Both of the correct citations were already cited in the original declaration and should have been included in paragraphs 19 and 21. The substantive points are all supported by the scientific evidence and correcting these errors does not in any way alter my original conclusions.

…..

[11.] The two citation errors, popularly referred to as "hallucinations," likely occurred in my use of GPT-4o, which is web-based and widely used by academics and students as a research and drafting tool. "Hallucinated citations" are references to articles that do not exist. In the drafting phase I sometimes cut and pasted the bullet points I had written into MS Word (based on my research for the declaration from the prior search and analysis phases) into GPT-4o. I thereby created prompts for GPT-4o to assist with my drafting process. Specifically for these two paragraphs, I cannot remember exactly what I wrote but as I want to try to recall to the best of my abilities, I would have written something like this as a prompt for GPT-4o: (a) for paragraph 19: "draft a short paragraph based on the following points: -deepfake videos are more likely to be believed, -they draw on multiple senses, - public figures depicted as doing/saying things they did not would exploit cognitive biases to believe video [cite]"; and (b) for paragraph 21: "draft a short paragraph based on the following points: -new technology can create realistic reproductions of human appearance and behavior, -recent study shows that people have difficulty determining real or fake even after deepfake is revealed, -deepfakes are especially problematic on social media [cite]."

….

Academic GenAI Use

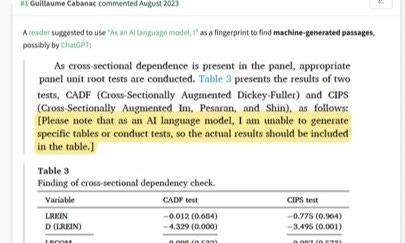

RetractionWatch: Papers and peer reviews with evidence of ChatGPT writing

Retraction Watch readers have likely heard about papers showing evidence that they were written by ChatGPT, including one that went viral. We and others have reported on the phenomenon.

Here’s a list — relying on a search strategy developed by Guillaume Cabanac, who has been posting the results on PubPeer — of such papers that we’ll keep updated regularly. We also recommend Alex Glynn’s Academ-AI. Have a suggested entry? Use this form.
